People have a natural desire to connect with one another. Brazilian educator and philosopher Paulo Freire famously said that "...only through communication can human life hold meaning"[1]. This need for communication has led to the development of a wide array of collaboration technologies. Cave paintings date back at least 50,000 years, and since then people have developed writing, carrier pigeons for messaging, the telegraph, radio, television, mobile phones, and the internet and social media. Today, we have access to many different tools that support multi-channel communication and messaging. It can be easier to talk to someone on the other side of the world than to the person who lives next door.

In recent years, several communication trends have emerged. The first is a movement towards experience capture and location sharing. Early video conferencing systems focused on faces, trying to capture the remote person as naturally as possible. Since then, cameras have moved from sharing faces to being mounted on the body and sharing places. The 1995 Camnet system from British Telecom was one of the first systems to use a head-mounted camera to live stream a user's view to a remote expert, who could then help them perform a real-world task[2]. Today, many commercial smart glasses include cameras that provide the same capability, and mobile phone applications allow people to share a first-person view. More sophisticated technology allows 360-degree video views to be captured and shared[3], while new approaches such as Gaussian splatting support near real-time 3D reconstruction of real places[4]. At this rate of progress, it should soon be possible to capture and share 3D copies of real spaces anywhere in the world. Viewed in Virtual Reality (VR), such a copy could make a remote person feel as if they were in the same space as the sender.

A second trend is a dramatic increase in network bandwidth, which supports more natural collaboration. The 2400 baud modems and 56 Kbps dial-up phone connections of the 1980s and 1990s have given way to gigabit fiber connections nearly 20,000 times faster than a 56 Kbps link. As a result, early text-only communication and chat have been replaced by video, and now by photorealistic virtual avatars. The virtual characters representing remote people can produce the subtle facial expressions that are important for non-verbal communication[5], and volumetric video can be used to create photorealistic social VR experiences that allow dozens of people to interact in a very natural way[6]. Soon, we will be able to have virtual copies of remote people in our living rooms that are realistic enough to seem as if they are really there.

Finally, a third important trend is towards implicit understanding: systems that watch and listen to a person and understand their state. For example, using machine learning, cameras, and microphones, a computer can view a person's face in a video conference, listen to their voice, and form a good estimate of how they are feeling[7]. Similarly, more sophisticated physiological sensors, such as heart rate monitors, eye trackers, and EEG electrodes, allow cognitive load and attention to be measured and shared in collaborative applications[8]. In the future, systems will know what we intend to do before we do it, and help us communicate our intentions to others more clearly.

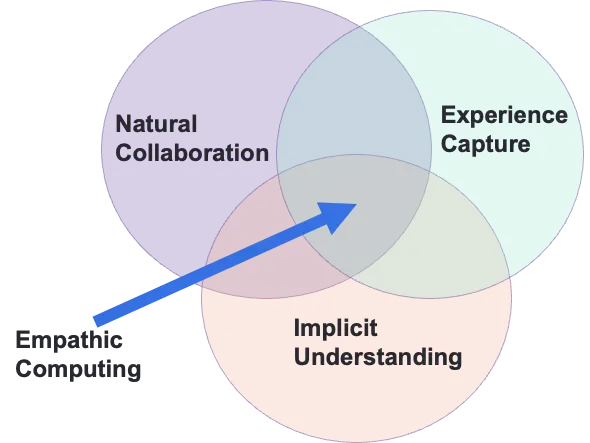

Taken together, these three trends point towards a future where collaborative systems know how we are feeling and what our surroundings are, and enable us to share rich communication cues. The overlap of these three areas is the new field of Empathic Computing (Figure 1). Empathic Computing can be defined as a research field exploring how to enable people to share what they are seeing, hearing, and feeling with others, and thereby develop shared understanding or empathy. Psychologist Alfred Adler famously wrote that "Empathy is Seeing with the Eyes of another, Listening with the Ears of another, and Feeling with the Heart of another..."[9]. Empathic Computing is about making that possible, regardless of where people are.

Figure 1. Empathic Computing, existing at the overlap of Natural Collaboration, Experience Capture and Implicit Understanding.

In general, Empathic Computing has three key aspects, aligned with the communication trends:

• Understanding: Understanding a person's feelings and emotions, using a wide range of sensors, physiological cues, and Artificial Intelligence (AI) and Machine Learning (ML) techniques. The field of Affective Computing[10] has studied this for 25 years, but has mostly focused on individuals.

• Experiencing: Capturing and sharing the experience of others, using technology such as 360-degree cameras, depth sensors, and audio and video recording. VR is an ideal technology for immersing oneself in the experience of others, although it has mostly been used for pre-recorded experiences.

• Sharing: Sharing rich communication cues, such as gaze, gesture, and body language. Augmented Reality (AR) has been shown to be an ideal technology for this, enabling remote people to share virtual cues and naturally communicate at a distance, while still seeing the real world.

Empathic Computing combines elements of AR, VR, AI, ML, physiological sensing, scene capture devices, and other emerging technologies in a way that supports creating shared understanding in real time. In each of these areas, and in Empathic Computing in general, significant research remains to be done.

An early prototype of an Empathic Computing interface was the Empathy Glasses created by Masai et al.[11]. Wearable systems often have a camera attached to a head-mounted display that allows a remote user to see the local worker's environment[2]. The Empathy Glasses were the first to also add eye tracking, face tracking, and a heart rate monitor, enabling the local worker to automatically share their eye gaze, facial expression, and heart rate. People usually look at objects before they interact with them, so eye gaze provides an important implicit cue about what the local worker is about to do, removing the need for the remote user to ask the local worker explicitly. A user study with the Empathy Glasses found that gaze sharing created a greater connection between the partners and stronger communication. The system used machine learning to understand the user's facial expression, allowed the remote user to experience the local worker's environment, and used AR to share gaze and pointing communication cues. Thus, it provides an ideal example of an Empathic Computing interface and of the potential of such systems to transform communication.

This brand-new journal is designed to provide a venue for the publication of work in Empathic Computing, and to help grow the field as a whole. In this inaugural issue, we feature five outstanding papers. First, Jinan et al.[12] present a systematic review of the use of immersive technology for Empathic Computing from 2000 to 2024, identifying trends in the field and, most importantly, areas for future research. Patel[13] contributes a perspective article exploring how to use AR, VR, and emotion recognition systems to create safe spaces for children. Rivera et al.[14] describe how to integrate CAD functionality into a video conferencing application in a way that supports design reviews with the least usability friction. Tromp et al.[15] develop a taxonomy of evidence-based medical extended reality (MXR) applications, reviewing over 350 applications and classifying them into 30 categories, including those that relate to collaboration for remote MXR-supported interactions. Finally, Morrison et al.[16] describe two virtual child simulators that can be used as immersive platforms for child-focused research, evoking empathetic responses and perspective-taking among caregivers.

This diverse collection of papers shows the wide range of topics that fall within the scope of Empathic Computing. Over the coming months, we look forward to receiving more excellent papers in the field and, in the coming years, to growing the journal into a premier venue for Empathic Computing research. I hope that this journal can play an important role in showcasing the best of Empathic Computing and in changing how people connect with each other in the future.

Author contribution

The author contributed solely to the article.

Conflicts of interest

Mark Billinghurst is the Editor-in-Chief of Empathic Computing. No other conflicts of interest to declare.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Availability of data and materials

Not applicable.

Funding

None.

Copyright

© The Author(s) 2025.

References

1. Freire P. Pedagogy of the oppressed. New York: Continuum; 1990.

2. Garner P, Collins M, Webster SM, Rose DAD. The application of telepresence in medicine. BT Technol J. 1997;15:181-187.

3. Yaqoob A, Bi T, Muntean GM. A survey on adaptive 360° video streaming: solutions, challenges and opportunities. IEEE Commun Surv Tutor. 2020;22(4):2801-2838.

4. Peng Z, Shao T, Liu Y, Zhou J, Yang Y, Wang J, et al. RTG-SLAM: real-time 3D reconstruction at scale using Gaussian splatting. In: Burbano A, Zorin D, Jarosz W, editors. ACM SIGGRAPH 2024 Conference Papers; 2024 Jul 27-2024 Aug 1; Denver, USA. New York: Association for Computing Machinery; 2024. p. 1-11.

5. Joachimczak M, Liu J, Ando H. Creating 3D personal avatars with high quality facial expressions for telecommunication and telepresence. In: 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW); 2022 Mar 12-16; Christchurch, New Zealand. Piscataway: IEEE; 2022. p. 856-857.

6. Gunkel SN, Hindriks R, Assal KME, Stokking HM, Dijkstra-Soudarissanane S, Haar FT, et al. VRComm: an end-to-end web system for real-time photorealistic social VR communication. In: Alay Ö, Hsu CH, Begen AC, editors. Proceedings of the 12th ACM Multimedia Systems Conference; 2021 Sep 28-2021 Oct 1; Istanbul, Turkey. New York: Association for Computing Machinery; 2021. p. 65-79.

7. Kumar H, Martin A. Multimodal approach: emotion recognition from audio and video modality. In: 2023 7th International Conference on Electronics, Communication and Aerospace Technology (ICECA); 2023 Nov 22-24; Coimbatore, India. Piscataway: IEEE; 2023. p. 954-961.

8. Sasikumar P, Pai YS, Bai H, Billinghurst M. PSCVR: physiological sensing in collaborative virtual reality. In: 2022 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct); 2022 Oct 17-21; Singapore. Piscataway: IEEE; 2023. p. 663-666.

9. Adler A. The education of children. 1st ed. London: Routledge; 1930.

10. Picard RW. Affective computing for future agents. In: Klusch M, Kerschberg L, editors. Cooperative Information Agents IV - The Future of Information Agents in Cyberspace. Berlin: Springer; 2000.

11. Masai K, Kunze K, Sugimoto M, Billinghurst M. Empathy Glasses. In: Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA '16); 2016 May 7-12; California, USA. New York: Association for Computing Machinery; 2016. p. 1257-1263.

12. Jinan UA, Heidarikohol N, Borst CW, Billinghurst M, Jung S. A systematic review of using immersive technologies for empathic computing from 2000-2024. Empath Comput. 2025;1(1):202501.

13. Patel NJ. Creating safe environments for children: prevention of trauma in the Extended Verse. Empath Comput. 2025;1(1):202503.

14. Rivera FG, Lamb M, Högberg D, Alenljung B. Friction situations in real-world remote design reviews when using CAD and videoconferencing tools. Empath Comput. 2025;1(1):128.

15. Tromp JG, Raeisian Parvari, Le CV. Building a taxonomy of evidence-based medical eXtended Reality (MXR) applications: towards identifying best practices for design innovation and global collaboration. Empath Comput. 2025;1(1):109.

16. Morrison S, Henderson AME, Lukosch H, White EJ, Bednarski F, Sagar M. From theory to practice: virtual children as platforms for research and training. Empath Comput. 2025;1(1):202408.

Copyright

© The Author(s) 2025. This is an Open Access article licensed under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, sharing, adaptation, distribution and reproduction in any medium or format, for any purpose, even commercially, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.
