Abstract

Aims: Storytelling has evolved alongside human culture, giving rise to new media such as social robots. While these robots employ modalities similar to those used by humans, they can also utilize non-biomimetic modalities, such as color, which are commonly associated with emotions. As research on the use of colored light in robotic storytelling remains limited, this study investigates its integration through three empirical studies.

Methods: We conducted three studies to explore the impact of colored light in robotic storytelling. The first study examined the effect of emotion-inducing colored lighting in romantic storytelling. The second study employed an online survey to determine appropriate light colors for specific emotions, based on images of the robot’s emotional expressions. The third study compared four lighting conditions in storytelling: emotion-driven colored lights, context-based colored lights, constant white light, and no additional lighting.

Results: The first study found that while colored lighting did not significantly influence storytelling experience or perception of the robot, it made recipients feel more serene. The second study showed improved recognition of amazement, rage, and neutral emotional states when colored light accompanied body language. The third study revealed no significant differences across lighting conditions in terms of storytelling experience, emotions, or robot perception; however, participants generally appreciated the use of colored lights. Emotion-driven lighting received slightly more favorable subjective evaluations.

Conclusion: Colored lighting can enhance the emotional expressiveness of robots. Both emotion-driven and context-based lighting strategies are appropriate for robotic storytelling. Through this series of studies, we contribute to the understanding of how colored lights are perceived in robotic communication, particularly within storytelling contexts.

Keywords

1. Introduction

While book sales are declining[1], interest in audiobooks and podcasts continues to grow[2]. One reason for the success of this medium might be the rise of digital download collections[3]. Thanks to providers such as Spotify or Audible and their respective mobile applications, recipients can listen to storytelling wherever and whenever they want, making it an important part of their everyday lives[4]. A crucial aspect of storytelling is to tell stories in a way that others can relate to and empathize with[5]. This is not only achieved by presenting information about characters, settings, and plot[6], but also requires storytellers to bring the characters’ and stories’ emotions to life[7]. To do so, human storytellers use multiple modalities, such as voice, facial expressions, and gestures[8]. New technologies offer new possibilities for receiving stories. For example, video games allow players to actively experience a story instead of merely witnessing it[9]. Similarly, the relatively new medium of social robots, which can communicate intuitively and naturally[10], is well suited for storytelling, particularly due to its multimodal capacities[11]. While they are capable of using human modalities, so-called biomimetic modalities[12], such as speech, gaze, or body language, they can also utilize non-biomimetic modalities, such as sound effects and colors, to communicate[13]. Although colors are not a modality naturally employed by humans, they are associated with emotions[14]. Robots can easily integrate colors into their emotional expressions using light-emitting diodes (LEDs)[15], thereby operationalizing the color modality in the form of colored light. If this approach proves effective for emotional expression, it would be especially appealing for low-budget robots, since colored lights are more affordable to install than biomimetic options such as motors for facial expressions.

While foundational research in Human-Robot Interaction (HRI) has explored integrating colored light into robotic emotion expressions[15-17], only a limited number of use cases, such as navigation tasks[18], have been tested. Many potential application areas, such as storytelling, remain largely unexplored. Steinhaeusser and Lugrin[19] examined the use of emotion-conveying LED eye colors of a storytelling robot, finding that participants in the control group, who interacted with a robot with constant white LED eye colors, had a more engaging storytelling experience. Extending the use of color from the robot’s LEDs to the room’s illumination, Steinhaeusser et al. demonstrated a positive effect of colored light on co-presence and the perception of a static robotic storyteller, even in the absence of body language[20].

Building on HRI research that recommends integrating motion and color for enhanced emotional expression[17], we conducted a series of studies combining colored lighting with emotion-expressive body language to further investigate the role of emotion-driven light in robotic storytelling. In the first study, we compared robotic storytelling enhanced with colored lighting, designed according to emotion induction guidelines from virtual environments, with storytelling using neutral or no additional lighting. Given the mixed results, we refined our approach and conducted an online study to further explore how colored room illumination influences human perception of robotic emotional expression. This study enabled us to empirically identify combinations of ambient lighting that support accurate recognition of robot-expressed emotions. In a third study, we applied these emotion-based lighting schemes and compared them with a contextual lighting approach, which aligned lighting colors with the environments described in the story. The findings indicate that both emotional and contextual lighting styles are suitable for robotic storytelling; however, participants showed a slight preference for the emotional lighting style, which we therefore recommend for future implementations.

2. Related Work

Storytelling can be defined in three dimensions: by its content, the development of coherent, temporally structured events; by its dialogic nature involving interaction between speaker and audience; and by the participation and responses of recipients[21,22]. Oral storytelling is inherently multimodal[23], as storytellers employ their voice, facial expressions, gestures, eye contact, and interactive engagement to connect the narrative with listeners[8]. These definitions highlight that receiving a story involves more than merely processing information; it is also about experiencing the emotions conveyed through the narrative and empathizing with the characters’ feelings and thoughts. The storyteller, in turn, bears the responsibility of crafting and delivering this emotional experience[7].

2.1 Emotions and storytelling experience

Broadly speaking, emotions are reactions to events[24,25]. Unlike moods, which are typically low in intensity, diffuse in focus, and sustained over time, emotions are intense, short-term responses elicited by specific events[26]. Notably, even when individuals are aware of the artificial nature of a stimulus, they often react as if it were real[27,28]. Emotion theories can be categorized into dimensional models, such as the Circumplex Model of Affect[29], which maps emotions along two axes, arousal (high or low) and valence (positive or negative), and discrete emotion models[24]. For instance, Plutchik’s Wheel of Emotions[30] proposes eight primary emotions, each with three levels of intensity. Similar emotions, such as ecstasy and admiration, are positioned close together, while opposing emotions, such as acceptance and disgust, are placed opposite one another.

Emotions play a crucial role in understanding a given text, particularly in the context of storytelling[31]. Moreover, story recipients rely on emotional content not only for comprehension but also for entertainment. For example, when encountering a humorous story, they expect to feel happiness, whereas a thriller or horror story is chosen to evoke feelings of fear or suspense. In essence, humans engage with fictional narratives to be emotionally moved. This emotional engagement is facilitated by the emotions expressed by the story or its characters, which in turn elicit emotional responses in the audience. “Although the emotions of fiction seem to happen to characters in a story, really, all the important emotions happen to [them]”[32]. These emotional responses are closely linked to the concept of transportation into a narrative[33]. Transportation is defined as a mental process in which recipients direct their cognitive resources (attention, imagery, and feelings) toward the story, thereby disconnecting from the real world and becoming absorbed in the narrative universe[34,35]. It has been shown to reduce negative cognitive reactions such as disbelief, enhance the perceived realism of the story, and intensify emotional responses toward characters[34]. Transportation also contributes to the enjoyment and perceived meaningfulness of a media artifact[36], making it a fundamental component of the storytelling experience. Importantly, this effect is not limited to traditional media but can emerge across various narrative formats[34], including novel platforms such as social robots[11].

2.2 Social robotics

Social robots are designed for natural interaction, enabling communication through both verbal and non-verbal modalities[10]. In doing so, they elicit social responses from human users not only on a cognitive level but also on an emotional one[10,37]. With their focus on social interactivity[38], social robots can be employed in various domains such as education[39,40] and entertainment[41,42]. To effectively engage in social interactions, social robots should exhibit anthropomorphic qualities[43]; that is, their form and behavior should be designed in a human-like manner to evoke anthropomorphism—the tendency to attribute human characteristics, such as gender or personality, to non-human entities like robots[44]. Therefore, it is recommended that social robots adopt human-like morphology[45,46], for example, by including features such as arms and legs, and by displaying social communication behaviors such as body language and social gaze[47,48]. These elements underscore the importance of mimicking human attributes, a design principle known as biomimetics[12].

These biomimetic modalities are also essential for storytelling robots. Several studies have shown that robotic storytellers employing emotional facial expressions congruent with the story content can enhance recipients’ transportation into the narrative[11,49], as well as increase the likeability of the robotic storyteller itself[49]. Moreover, the integration of multiple modalities appears to be as important in HRI as it is in human-human communication. As noted, “When communicating using their full multi-modal expressive potential, speakers can increase communicative efficiency by simultaneously transporting complementary information, and foster robustness by providing redundant information in various modalities”[50], a concept known as multimodality. In line with this, Ham et al.[51] found that a robot’s persuasiveness in a moral context can be improved by combining gaze with concurrent gestures during speech, thereby demonstrating the applicability of multimodal benefits to HRI.

2.3 Colored light as a modality

In contrast to the biomimetic modalities discussed above, which are modeled after human behavior, robots are also capable of expressing emotions through non-biomimetic modalities that lack direct biological analogues, such as the use of colored light[12]. In a preliminary use case, Rea et al.[52] combined both light and color to enable guests in a café to program a non-humanoid Roomba utility robot, equipped with an LED strip, to represent their mood using colored lights. Research further suggests that emotional expression through multimodal robot behaviors can be enhanced by the addition of color[17], indicating that findings from color psychology may be applicable to HRI[15]. For example, using the ball-shaped robot Maru, Song and Yamada[13] confirmed associations such as blue with sadness or red with anger in robotic emotional expressions. In a subsequent study, they combined light color and dynamic patterns to create and validate emotion expressions using a Roomba robot with an LED strip[15]. Again, principles from color psychology were validated; for instance, an intensely blinking red light was perceived as conveying hostility. They also found that expressive lighting enhanced the emotional interpretability of in-situ motion cues[53]. Additional studies employing robots specifically designed for multimodal emotional expression, such as those incorporating colored, blinking lights into a robot’s ears[54] or chest[55], have likewise reported successful emotion communication using these visual cues.

Several robots, such as Sony’s AIBO robot dog and Aldebaran’s humanoid NAO robot, are equipped with colored lights, typically installed in their eyes, to support emotional expression. However, studies examining the effectiveness of the NAO robot’s eye LEDs for conveying emotion have reported rather negative outcomes[56,57]. In the specific context of storytelling, a preliminary study by Steinhaeusser et al.[19] found that the use of emotionally colored eye LEDs on the NAO robot negatively affected the storytelling experience. This was reflected in a reduction in cognitive absorption (a state of cognitive and emotional engagement with technology, comparable to flow[58,59], and characterized by factors such as attention and curiosity[60]) as well as a decrease in the perceived animacy of the robotic storyteller. The authors attribute these effects to the distracting nature of applying a non-biomimetic modality (colored light) to an anthropomorphic body part. They propose expanding the illuminated area to surfaces such as walls or floors, as demonstrated by Betella et al.[61]. This approach, projecting emotion-associated colors into the environment, has already been explored in audiobook storytelling, where lighting was dynamically adjusted to match the narrator’s emotional tone[62].

However, colors and colored light can not only reinforce emotional expression but also induce emotions[63,64]. In films, for example, color is deliberately used to evoke specific emotional responses in viewers[65,66], a technique that has also been adopted in other media such as video games[67]. In a study manipulating the background color of a video game, Wolfson and Case[68] found that players exhibited different cognitive and physiological responses to the colors blue and red. Specifically, while performance steadily improved with a blue background, it stagnated under red. The authors attributed these differences to variations in arousal levels induced by the colors, as evidenced by heart rate measurements. Similar results were reported by Joosten et al.[69], who manipulated ambient light color in a fantasy role-playing game’s virtual environment: reddish light elicited negatively valenced arousal, whereas yellow light was experienced as positively valenced. Steinhaeusser et al.[67] synthesized such findings on the emotional impact of color and light into a set of design guidelines for emotion-inducing virtual environments, which were validated in both desktop and immersive VR settings[67,70]. Applying these guidelines to storytelling with both a physically embodied and a virtual robot, Steinhaeusser et al.[20] demonstrated that the addition of emotion-inducing light enhanced perceptions of the robot’s social presence and overall robot perception. These results highlight the potential benefits of emotion-inducing colored light in robotic storytelling as well.

3. Contribution

Given the significant potential of the color modality, realized through colored light, in emotional robotic storytelling, this work seeks to further explore this relatively under-investigated channel. In light of the importance of multimodality and existing recommendations to combine motion with color, we conducted a series of studies examining the integration of colored light and expressive body language in a robotic storytelling context. Our investigation focuses on three key aspects: the storytelling experience, emotion induction, and robot perception.

First (Study I; see chapter 4), we extended the approach of Steinhaeusser et al.[20] by implementing emotion-driven colored lighting in robotic storytelling, guided by design principles from virtual environments and combined with emotional bodily expressions. To evaluate the effects, we conducted a laboratory user study comparing three conditions: robotic storytelling with emotion-driven colored lighting, with constant white lighting, and with no additional lighting. As we were not able to replicate the findings of Steinhaeusser et al., we adapted our lighting approach to better suit the context of our expressive social robot, shifting our focus from emotion induction to emotion recognition. Consequently, we conducted an online study (Study II; see chapter 5) aimed at identifying suitable light colors that support the robot’s emotional bodily expressions.

The empirically derived emotion-matching light colors were subsequently applied in an evaluative user study (Study III; see chapter 6). In this study, we not only assessed the effectiveness of our emotion-driven lighting approach but also compared it to a context-based lighting strategy. Specifically, we compared four storytelling conditions: one using emotion-driven colored lighting, one using context-based lighting aligned with the story’s environmental setting, one with constant white lighting, and one without any additional lighting. Our findings indicate that while overall storytelling experience, emotion induction, and robot perception showed minimal differences across conditions, participants expressed a clear preference for robotic storytelling that incorporated colored lighting over versions with constant or no lighting enhancements. Both lighting strategies, namely emotion-driven and context-based approaches, proved suitable for robotic storytelling; however, qualitative feedback slightly favored the emotion-driven approach. Therefore, we recommend integrating emotion-driven colored lighting into robotic storytelling, while acknowledging the general effectiveness of both approaches.

4. Study I: Embedding Light in Multimodal Robotic Storytelling

To gain initial insights, we conducted a laboratory study examining the combination of expressive body language and emotion-inducing colored light in robotic storytelling. A romantic story was presented under three conditions: colored light, constant white light, and no additional lighting. Colored lighting was hypothesized to enhance the robot’s expressiveness and directly influence recipients’ emotional states. As prior studies have shown that storytelling experience is improved by the integration of expressive biomimetic modalities in robotic storytellers[11,49] and that the emotional impact of stories contributes significantly to narrative engagement[71], which in turn is positively associated with transportation-related effects[33], we anticipated that adding emotion-inducing colored light would enhance the storytelling experience.

• H1a: Transportation will be higher in the adaptive lighting condition compared to the constant white or no lighting conditions.

• H1b: Cognitive absorption will be higher in the adaptive lighting condition compared to the constant white or no lighting conditions.

When engaging with a story, recipients often adopt the emotions of the characters, as conveyed by the storyteller, due to empathetic processes[7,32,72]. Given that we selected a romantic story designed to elicit emotions of positive valence[32], we expect participants to report increased positive emotions and decreased negative emotions following the storytelling session.

• H2a: Positive affect will be higher after receiving the story.

• H2b: Negative affect will be lower after receiving the story.

• H2c: Joviality will be higher after receiving the story.

• H2d: Serenity will be higher after receiving the story.

Previous research has shown that colored lights can enhance a robot’s ability to express emotions[53]. Therefore, we propose that when a robotic storyteller employs multimodal emotional expressions, including colored lighting, to bring the story and its characters to life, recipients’ emotional responses and affective states may likewise be influenced.

• RQ1: Do changes in emotional state differ across the lighting conditions?

• RQ2: Does attentiveness vary between the lighting conditions?

As Steinhaeusser et al.[20] demonstrated a positive effect of the light modality on the perception of the robotic storyteller, we further examine general robot perception across several dimensions, including anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety.

• RQ3: Does the perception of the robot differ across the lighting conditions?

4.1 Materials

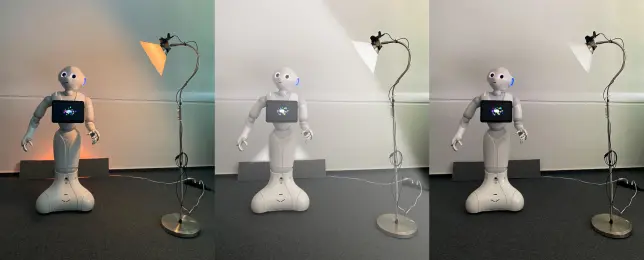

To examine the impact of lighting on recipients’ storytelling experience, emotional responses, and robot perception, we implemented three versions of a romantic story narrated by a social robot: (1) storytelling with adaptive colored lighting based on established guidelines for emotion-inducing lighting in virtual environments[67], (2) storytelling with constant white lighting, and (3) storytelling without any additional lighting. Representative snapshots of each condition are shown in Figure 1.

Figure 1. Snapshots of the Pepper robot expressing admiration during storytelling. Conditions from left to right: adaptive lighting, constant lighting, no additional lighting.

4.1.1 Concept

We selected a romantic story due to the high popularity of this genre, particularly among female participants[73], who were expected to be well-represented in our study sample. The short narrative centers on a girl meeting a boy at the beach, with both gradually falling in love. An English translation of the story is provided in Supplementary materials. The story was delivered by the Pepper robot and had a duration of approximately six minutes.

First, the story was annotated with respect to emotional content. This annotation was conducted in a prior project, from which we reused the resulting data. The story was tokenized at the clause level, using punctuation marks such as commas or full stops as segmentation points. A total of 89 individual annotators (61 female, 28 male, 0 diverse; age: M = 21.98, SD = 2.09) labeled each token with the emotion they believed a storyteller should express when narrating that part of the story. We employed the eight primary emotions from Plutchik’s Wheel of Emotions[74], along with an additional “neutral” label and an “I don’t know” option. Given the relatively large number of annotators compared to related studies[20,75,76], we applied a more conservative approach to determine consensus labels. Specifically, we calculated 95% confidence intervals (CIs) for the frequency of each label and retained only the most frequently selected emotion labels whose CIs did not overlap. If no label met this criterion, the token was labeled as “neutral”. Using this method, 55 tokens were assigned specific emotion labels, while 45 tokens were labeled as “neutral”.
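The consensus rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis script used in the study: the text does not say which interval type was computed, so the sketch assumes a normal-approximation (Wald) interval for each label’s proportion and accepts the most frequent label only when the lower bound of its interval lies above the upper bound of the runner-up’s interval. The names `wald_ci` and `consensus_label` are hypothetical, and any special handling of the “I don’t know” option is left out.

```python
from collections import Counter
from math import sqrt

Z_95 = 1.96  # two-sided 95% quantile of the standard normal distribution


def wald_ci(count, n, z=Z_95):
    """Normal-approximation CI for a proportion, clipped to [0, 1] (assumed interval type)."""
    p = count / n
    half_width = z * sqrt(p * (1 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


def consensus_label(votes, fallback="neutral"):
    """Return the most frequent label if its CI does not overlap the runner-up's CI."""
    if not votes:
        return fallback
    n = len(votes)
    ranked = Counter(votes).most_common()
    top_label, top_count = ranked[0]
    if len(ranked) == 1:
        return top_label  # unanimous vote, nothing to compare against
    _, second_count = ranked[1]
    top_low, _ = wald_ci(top_count, n)
    _, second_high = wald_ci(second_count, n)
    # Overlapping intervals mean no clear consensus, so fall back to "neutral".
    return top_label if top_low > second_high else fallback


# Toy votes for one token from 89 hypothetical annotators (not study data).
print(consensus_label(["grief"] * 70 + ["terror"] * 19))
print(consensus_label(["grief"] * 45 + ["terror"] * 44))
```

With 89 annotators, a 70-to-19 split yields clearly separated intervals, whereas a 45-to-44 split does not and the token falls back to “neutral”. A different interval type (for example, Wilson) would shift the boundary cases slightly.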

Next, we conceptualized the robot’s behavior. For body language, we employed emotional bodily expressions for the Pepper robot that had been pre-tested by Steinhaeusser et al.[75] (recognition rates are presented in Table 1; corresponding images of the body language are provided in Supplementary materials). As the authors’ set of expressions corresponds to the eight primary emotions from Plutchik’s Wheel of Emotions, we were able to directly align the expressions with the story tokens labeled with the respective emotions.

| Emotion Label | Color Combination Version | RR MM all | RR MM clean | RR UM[89] |
|---|---|---|---|---|
| Vigilance | 1 | 5.94% | 6.82% | 26.67% |
| | 2 | 10.89% | 11.96% | |
| | 3 | 10.89% | 12.09% | |
| Admiration | 1 | 17.82% | 18.95% | 31.11% |
| | 2 | 25.74% | 27.66% | |
| | 3 | 23.76% | 24.74% | |
| Amazement | 1 | 30.69% | 32.39% | 44.44% |
| | 2 | 33.66% | 36.96% | |
| | 3 | 42.57% | 47.25% | |
| Ecstasy | 1 | 43.56% | 44.90% | 64.44% |
| | 2 | 32.67% | 33.33% | |
| | 3 | 49.50% | 50.51% | |
| Loathing | 1 | 11.88% | 12.50% | 68.89% |
| | 2 | 12.87% | 13.27% | |
| | 3 | 8.91% | 9.09% | |
| Terror | 1 | 41.58% | 43.75% | 82.22% |
| | 2 | 46.53% | 51.09% | |
| | 3 | 49.50% | 53.19% | |
| Rage | 1 | 55.45% | 56.57% | 60.00% |
| | 2 | 72.29% | 72.28% | |
| | 3 | 68.32% | 70.41% | |
| Grief | 1 | 64.36% | 65.66% | 97.78% |
| | 2 | 79.21% | 80.00% | |
| | 3 | 71.29% | 72.00% | |
| Neutral | 1 | 68.32% | 68.32% | 31.11% |

RR: recognition rates; MM: multimodal; UM: unimodal; all: including all labels; clean: without “I don’t know” option.

Finally, we defined the color settings for the adaptive lighting condition. As prior research[20] suggests that design guidelines for emotion-inducing lighting in virtual environments can be effectively applied to robotic storytelling, we adopted this approach and conceptualized illumination settings for each emotion label based on the guidelines proposed by Steinhaeusser et al.[67]. However, unlike previous studies that used only a spotlight directed at the robot[20], we additionally incorporated ambient lighting that illuminated the wall behind the robot, as recommended by Steinhaeusser and Lugrin[19]. The specific color settings for each emotion are detailed in Table 2. For the neutral label, we used white ambient light (hue 0, saturation 0, brightness 100) and turned off the spotlight. Since amazement can carry both positive and negative valence[77], we applied the same lighting configuration as for the neutral label. As vigilance and loathing were not selected during the annotation process, presumably because these emotions were not expressed in the story, no lighting was configured for them.

| Emotion Label | Spotlight Hue | Spotlight Saturation | Ambient Light Hue | Ambient Light Saturation | Guideline number from[67] |
|---|---|---|---|---|---|
| Ecstasy | 35 | 100 | 35 | 100 | GLpos 12: warm and balanced light, sunset colors |
| Admiration | 60 | 40 | 50 | 100 | GLpos 8, GLpos 9, GLpos 11: bright or pastel colors, yellowish sunlight |
| Terror | 180 | 100 | 10 | 100 | GLneg 5, GLneg 9, GLneg 10: warm reddish and cool blueish imbalanced lights |
| Grief | 205 | 40 | 205 | 60 | GLneg 8, GLneg 9: blueish and greyish dim light |
| Rage | 0 | 100 | 0 | 100 | GLneg 5, GLneg 9: warm light and red highlights |

4.1.2 Implementation

The storytelling sequence was implemented using Unity version 2019.1.14f1. We employed the Pepper Python Unity Toolkit[78] to send commands to the robot. The toolkit relies on the robot’s internal speech synthesis engine; thus, no modifications were made to voice modulation. Each story token was transmitted as a text-to-speech command along with encapsulated functions containing the predefined parameters for the corresponding emotional posture, based on the emotion label assigned to the token (see Section 4.1.1). This setup constituted the control condition, in which no additional illumination was provided.

To implement the additional lighting, we used two Tapo E27 smart bulbs: a spotlight directed at the robot and an ambient light positioned behind it, as illustrated in Figure 1. The smart lights were integrated with Unity via a Python 3.0 server, which controlled the bulbs using the PyP100 module. In the constant-light condition, a command was sent at the beginning of the storytelling session to set both the spotlight and ambient light to white. In the adaptive, emotion-inducing lighting condition, we defined individual functions for each emotion label, each encapsulating the corresponding spotlight and ambient light color settings. The function matching the emotion label of the current token was called concurrently with sending the token to the robot. For most emotion labels, the spotlight and ambient light used different colors (Table 2); the exceptions were ecstasy and rage, which were represented by two yellow and two red lights, respectively. For instance, grief was represented by two distinct shades of blue, while terror combined a warm reddish ambient light with a cool blueish spotlight. The final stimuli had a total duration of approximately five minutes.
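The mapping from emotion labels to light settings can be illustrated with a short Python sketch. It is not the study’s Unity or server code: the hue and saturation values are taken from Table 2 and the neutral configuration from Section 4.1.1, while the names `Light`, `Scene`, `scene_for`, and `play_token`, as well as the two callbacks `say` and `set_lights`, are hypothetical placeholders for the robot and bulb interfaces. Falling back to the neutral scene for labels without a configuration (vigilance, loathing) is likewise an assumption of this sketch, and the two callbacks are invoked one after the other rather than concurrently.

```python
from typing import Callable, Dict, NamedTuple, Optional


class Light(NamedTuple):
    hue: int         # degrees on the color wheel
    saturation: int  # percent


class Scene(NamedTuple):
    spotlight: Optional[Light]  # None means the spotlight is switched off
    ambient: Light


# Neutral: white ambient light (hue 0, saturation 0), spotlight off.
NEUTRAL = Scene(spotlight=None, ambient=Light(0, 0))

# Values as listed in Table 2; amazement reuses the neutral configuration.
SCENES: Dict[str, Scene] = {
    "ecstasy": Scene(Light(35, 100), Light(35, 100)),
    "admiration": Scene(Light(60, 40), Light(50, 100)),
    "terror": Scene(Light(180, 100), Light(10, 100)),
    "grief": Scene(Light(205, 40), Light(205, 60)),
    "rage": Scene(Light(0, 100), Light(0, 100)),
    "amazement": NEUTRAL,
    "neutral": NEUTRAL,
}


def scene_for(label: str) -> Scene:
    """Look up the scene for a label; unconfigured labels fall back to neutral (assumption)."""
    return SCENES.get(label.lower(), NEUTRAL)


def play_token(
    text: str,
    label: str,
    say: Callable[[str, str], None],
    set_lights: Callable[[Scene], None],
) -> None:
    """Set the lights for a token's emotion label, then hand the token to the robot."""
    set_lights(scene_for(label))
    say(text, label)


if __name__ == "__main__":
    # Stand-in callbacks that only print; real ones would talk to the bulbs and the robot.
    play_token(
        "They looked at each other.",
        "admiration",
        say=lambda text, label: print(f"robot says ({label}): {text}"),
        set_lights=lambda scene: print(f"lights: {scene}"),
    )
```

Keeping the color table separate from the device calls means the same lookup could serve both the adaptive condition and the constant-light condition, where a single white scene would be sent once at the start.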

4.2 Methods

To investigate the effects of different lighting conditions in robotic storytelling, we conducted a laboratory study. Using a between-subjects design, we compared the influence of (1) adaptive emotion-driven colored lighting, (2) constant white light, and (3) the absence of additional lighting on recipients’ storytelling experience, emotional responses, and perception of the robotic storyteller. These conditions were based on the three storytelling sequences described in Section 4.1. The study was reviewed and approved as ethically sound by the local ethics committee.

4.2.1 Measures

To assess storytelling experience, transportation was measured using the Transportation Scale Short Form (TS-SF)[79], which consists of six items (e.g., “I wanted to learn how the narrative ended”). Participants responded on a 7-point Likert scale ranging from 1 (“not at all”) to 7 (“very much”). Appel et al.[79] reported Cronbach’s alpha values between .80 and .87 for the TS-SF; for the current sample, internal consistency was similarly high with a Cronbach’s alpha of .86.

Further, the Cognitive Absorption (CA) questionnaire was used to measure the recipients’ level of involvement in the robotic storytelling experience. Originally developed by Agarwal et al.[58] to investigate engagement in web and software usage, the questionnaire was adapted to the robotic storytelling context following the approach of Steinhaeusser and Lugrin[19]. It comprises five scales: (1) the Temporal Dissociation scale, which originally includes five items but was reduced to three items that refer to the recipients’ sense of time during the interaction with the robot (e.g., “Time flew while the robot told the story”); (2) the Focused Immersion scale, comprising five items (e.g., “While listening to the robot, I got distracted by other things very easily”); (3) the Heightened Enjoyment scale, with four items (e.g., “I enjoyed using the robot”); (4) the Control scale, with three items (e.g., “I felt that I have no control while listening to the robot”); and (5) the Curiosity scale, with three items (e.g., “Listening to the robot made me curious”). All items were rated on a seven-point Likert scale (1: “Strongly disagree”, 4: “Neutral”, 7: “Strongly agree”). Agarwal et al.[58] reported reliability values of .93 for the Temporal Dissociation, Heightened Enjoyment, and Curiosity scales, .88 for the Focused Immersion scale, and .83 for the Control scale. In the current sample, Cronbach’s alpha was .88 for the Temporal Dissociation and Focused Immersion scales, .92 for the Heightened Enjoyment scale, .62 for the Control scale, and .90 for the Curiosity scale.

Recipients’ emotions were measured using the Positive and Negative Affect Schedule-Expanded Form (PANAS-X)[80,81]. In more detail, we utilized the Positive Affect scale (10 items, e.g. “enthusiastic”) and Negative Affect scale (10 items, e.g. “nervous”) to obtain a general overview as well as the scales concerning Joviality (8 items, e.g. “cheerful”), and Serenity scale (3 items, e.g. “relaxed”). Furthermore, we measured Attentiveness (4 items, e.g. “attentive”) to examine the influence of the robotic storytelling on the participants’ attention. Each item was presented with a five-point Likert scale (1: “very slightly or not at all”, 2: “a little”, 3: “moderately”, 4: “quite a bit”, 5: “extremly”). Watson and Clark[81] reported internal consistency of .83 to .88 for Positive Affect, .85 to .91 for Negative Affect, .93 for Joviality, .74 for Serenity, and .72 for Attentiveness measuring the emotional state at the moment. Values for the current study were .90 for Positive Affect, .82 to .93 for Negative Affect, .92 to .94 for Joviality, .75 to .77 for Serenity, and .79 to .80 for Attentiveness. Robot perception was measured using the Godspeed questionnaire[82]. It includes five scales measured on five-point semantic differentials: (1) Anthropomorphism (5 items, e.g., “machinelike” versus “humanlike”), (2) Animacy (6 items, e.g. “mechanical” versus “organic”), (3) Likeability (5 items, e.g., “unfriendly” versus “friendly”), (4) Perceived Intelligence (5 items, e.g., “foolish” versus “sensible”), and (5) Perceived Safety (3 items, e.g., “anxious” versus “relaxed”). Bartneck et al. reported Cronbach’s alpha of .88 to .93 for Anthropomorphism, .70 for Animacy, .87 to .92 for Likeability, and .75 to .77 for Perceived Intelligence. No internal consistency value was reported for Perceived Safety.In the current sample, Cronbach’s alpha values were .80 for Anthropomorphism, .79 for Animacy, .83 for Likeability, .80 for Perceived Intelligence, and .63 for Perceived Safety. 
Lastly, participants provided demographic data, i.e. age and gender, and were invited to leave a comment.
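
The internal consistencies reported for the questionnaires above are Cronbach’s alpha values. As a minimal sketch (not the authors’ analysis procedure), alpha can be computed from raw item scores as shown below. The function name `cronbach_alpha` and the example ratings are hypothetical, and reverse-coded items are assumed to have been recoded beforehand.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for one scale.

    items: list of k lists, one per questionnaire item, each holding
    the scores of the same n respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    # Sum score of each respondent across all items of the scale
    totals = [sum(item[i] for item in items) for i in range(n)]
    # Sample variances of the single items and of the sum score
    sum_item_var = sum(variance(item) for item in items)
    total_var = variance(totals)
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Hypothetical ratings of five respondents on a three-item scale (1 to 7)
example_items = [
    [5, 6, 3, 7, 4],
    [4, 6, 2, 7, 5],
    [5, 7, 3, 6, 4],
]
print(round(cronbach_alpha(example_items), 2))
```

Values closer to 1 indicate that the items of a scale vary together; the comparatively low values for the Control and Perceived Safety scales would correspond to items that covary only weakly in this sample.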

4.2.2 Procedure

When arriving at the lab, participants first provided informed consent to take part in the study. Next, they completed the first part of the emotion questionnaire. Participants were then randomly assigned to one of the three conditions and received the robotic storytelling either with adaptive, emotion-driven lighting, constant illumination, or no additional lighting. Afterward, they completed the rest of the questionnaire. Lastly, they were thanked and fully debriefed about the aim of the study.

4.2.3 Participants

Overall, 98 participants were recruited. However, six of them had to be excluded due to technical issues with the Pepper robot or the smart lighting system, such as lost connections. Thus, data from 92 participants were analyzed (mean age = 21.99 years, SD = 2.44). The majority, 73 participants, self-identified as female (age: M = 21.82, SD = 2.33), whereas 19 participants self-identified as male (age: M = 22.63, SD = 2.81). No participants identified as non-binary or another gender. Following random assignment, 30 participants received the storytelling with constant lighting (25 female, 5 male; age: M = 21.53, SD = 2.71), 31 with adaptive lighting (22 female, 9 male; age: M = 22.55, SD = 2.87), and 31 without any additional lighting (26 female, 5 male; age: M = 21.87, SD = 1.48).

4.3 Results

All analyses were conducted using JASP[83] version 0.16, with a significance threshold set at .05. Descriptive statistics are presented in Table 3. First, assumption checks were performed. Shapiro-Wilk tests indicated violations of the normality assumption for Transportation, Curiosity, and Anthropomorphism. Levene’s tests showed violations of the homogeneity assumption for Negative Affect, Perceived Intelligence, and Perceived Safety.

| Measure | Time | Adaptive Lighting M | Adaptive Lighting SD | Constant Lighting M | Constant Lighting SD | No Additional Lighting M | No Additional Lighting SD |
|---|---|---|---|---|---|---|---|
| Transportation<sup>a</sup> | | 4.44 | 1.33 | 4.69 | 1.20 | 4.56 | 1.16 |
| CA: Temporal Dissociation<sup>a</sup> | | 4.31 | 1.41 | 4.19 | 1.42 | 4.00 | 1.58 |
| CA: Focused Immersion<sup>a</sup> | | 4.50 | 1.14 | 4.31 | 1.39 | 4.43 | 1.17 |
| CA: Heightened Enjoyment<sup>a</sup> | | 5.01 | 1.23 | 5.12 | 1.05 | 4.86 | 1.21 |
| CA: Control<sup>a</sup> | | 3.10 | 1.29 | 2.80 | 1.04 | 2.56 | 1.03 |
| CA: Curiosity<sup>a</sup> | | 4.71 | 1.42 | 4.96 | 1.47 | 4.49 | 1.24 |
| Positive Affect<sup>b</sup> | pre | 2.58 | 0.76 | 2.71 | 0.72 | 2.67 | 0.73 |
| | post | 2.57 | 0.66 | 2.72 | 0.75 | 2.60 | 0.81 |
| Negative Affect<sup>b</sup> | pre | 1.37 | 0.46 | 1.41 | 0.38 | 1.29 | 0.31 |
| | post | 1.27 | 0.42 | 1.17 | 0.20 | 1.19 | 0.25 |
| Joviality<sup>b</sup> | pre | 2.70 | 0.83 | 2.79 | 0.65 | 2.82 | 0.85 |
| | post | 2.79 | 0.80 | 2.99 | 0.69 | 2.90 | 0.94 |
| Serenity<sup>b</sup> | pre | 3.52 | 0.81 | 3.31 | 0.88 | 3.31 | 0.77 |
| | post | 3.55 | 0.82 | 3.59 | 0.83 | 3.12 | 0.70 |
| Attentiveness<sup>b</sup> | pre | 2.97 | 0.73 | 3.15 | 0.74 | 3.11 | 0.79 |
| | post | 2.86 | 0.70 | 3.07 | 0.72 | 2.86 | 0.85 |
| Anthropomorphism<sup>b</sup> | | 2.08 | 0.77 | 2.15 | 0.75 | 2.08 | 0.62 |
| Animacy<sup>b</sup> | | 2.67 | 0.59 | 2.71 | 0.72 | 2.74 | 0.65 |
| Likeability<sup>b</sup> | | 3.92 | 0.63 | 4.08 | 0.49 | 4.17 | 0.48 |
| Perceived Intelligence<sup>b</sup> | | 3.77 | 0.38 | 3.79 | 0.64 | 3.76 | 0.79 |
| Perceived Safety<sup>b</sup> | | 3.47 | 0.80 | 3.57 | 0.76 | 3.19 | 0.73 |

CA: Cognitive Absorption; a: Calculated values from 1 to 7; b: Calculated values from 1 to 5.

For storytelling experience, no significant differences were found when comparing Transportation between the three conditions (χ²(2) = 0.31, p = .855). In line with this, a planned contrast indicated no significant difference between the adaptive lighting condition and the conditions with constant or no lighting (t(89) = -0.69, p = .494).
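
The text does not name the omnibus test behind the χ²(2) statistics reported for the measures that violated the normality assumption. Assuming a rank-based Kruskal-Wallis test, the following sketch shows how such a statistic and its p-value can be obtained. The function names and the toy data are hypothetical; the closed-form p-value used here holds only for two degrees of freedom, i.e., three groups.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks of the values; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Tie-corrected Kruskal-Wallis H statistic and its degrees of freedom."""
    pooled = [x for group in groups for x in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted_rank_sums = 0.0
    start = 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        weighted_rank_sums += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * weighted_rank_sums - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction, len(groups) - 1


def chi2_sf_df2(x):
    """Upper-tail probability of a chi-square distribution with df = 2."""
    return math.exp(-x / 2)


# Toy scores for three conditions (hypothetical, not study data)
h, df = kruskal_wallis([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(h, df, chi2_sf_df2(h))

# A reported statistic can be checked the same way, e.g. Curiosity:
print(round(chi2_sf_df2(4.51), 3))  # about .105, matching the reported p
```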

Concerning Cognitive Absorption, no significant group differences were revealed for Temporal Dissociation (F(2, 89) = 0.36, p = .701), Focused Immersion (F(2, 89) = 0.17, p = .842), Heightened Enjoyment (F(2, 89) = 0.37, p = .696), Control (F(2, 89) = 1.77, p = .177), or Curiosity (χ²(2) = 4.51, p = .105). Planned contrasts between the condition with adaptive lighting and the conditions with constant or no lighting also did not reveal significant differences for Temporal Dissociation (t(89) = 0.67, p = .504), Focused Immersion (t(89) = 0.46, p = .651), Heightened Enjoyment (t(89) = 0.07, p = .943), Control (t(89) = 1.68, p = .097), or Curiosity (t(89) = 0.02, p = .981).
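
The planned contrasts compare the adaptive lighting condition against the two other conditions. The following sketch computes such a contrast from group summary statistics with a pooled error term. The contrast weights (1, -0.5, -0.5) are an assumption, since the text does not report them, and the function name is hypothetical. Means and standard deviations are the rounded Control values from Table 3; group sizes are taken from Section 4.2.3.

```python
import math


def planned_contrast_t(means, sds, ns, weights):
    """t statistic of a linear contrast in a one-way between-subjects
    design, computed from group summary statistics."""
    if abs(sum(weights)) > 1e-12:
        raise ValueError("contrast weights must sum to zero")
    df_error = sum(ns) - len(ns)
    # Pooled within-group variance (the ANOVA mean squared error)
    mse = sum((n - 1) * sd ** 2 for n, sd in zip(ns, sds)) / df_error
    estimate = sum(w * m for w, m in zip(weights, means))
    se = math.sqrt(mse * sum(w ** 2 / n for w, n in zip(weights, ns)))
    return estimate / se, df_error


# CA: Control; order: adaptive, constant, no additional lighting
t, df = planned_contrast_t(
    means=[3.10, 2.80, 2.56],
    sds=[1.29, 1.04, 1.03],
    ns=[31, 30, 31],
    weights=[1.0, -0.5, -0.5],  # assumed weights, not stated in the text
)
print(f"t({df}) = {t:.2f}")
```

Under these assumptions, the sketch yields a t value of about 1.69 with 89 degrees of freedom, close to the reported t(89) = 1.68; a small gap is expected because the inputs are rounded table values. The p-value would follow from a t distribution with 89 degrees of freedom, which is omitted here to keep the example within the standard library.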

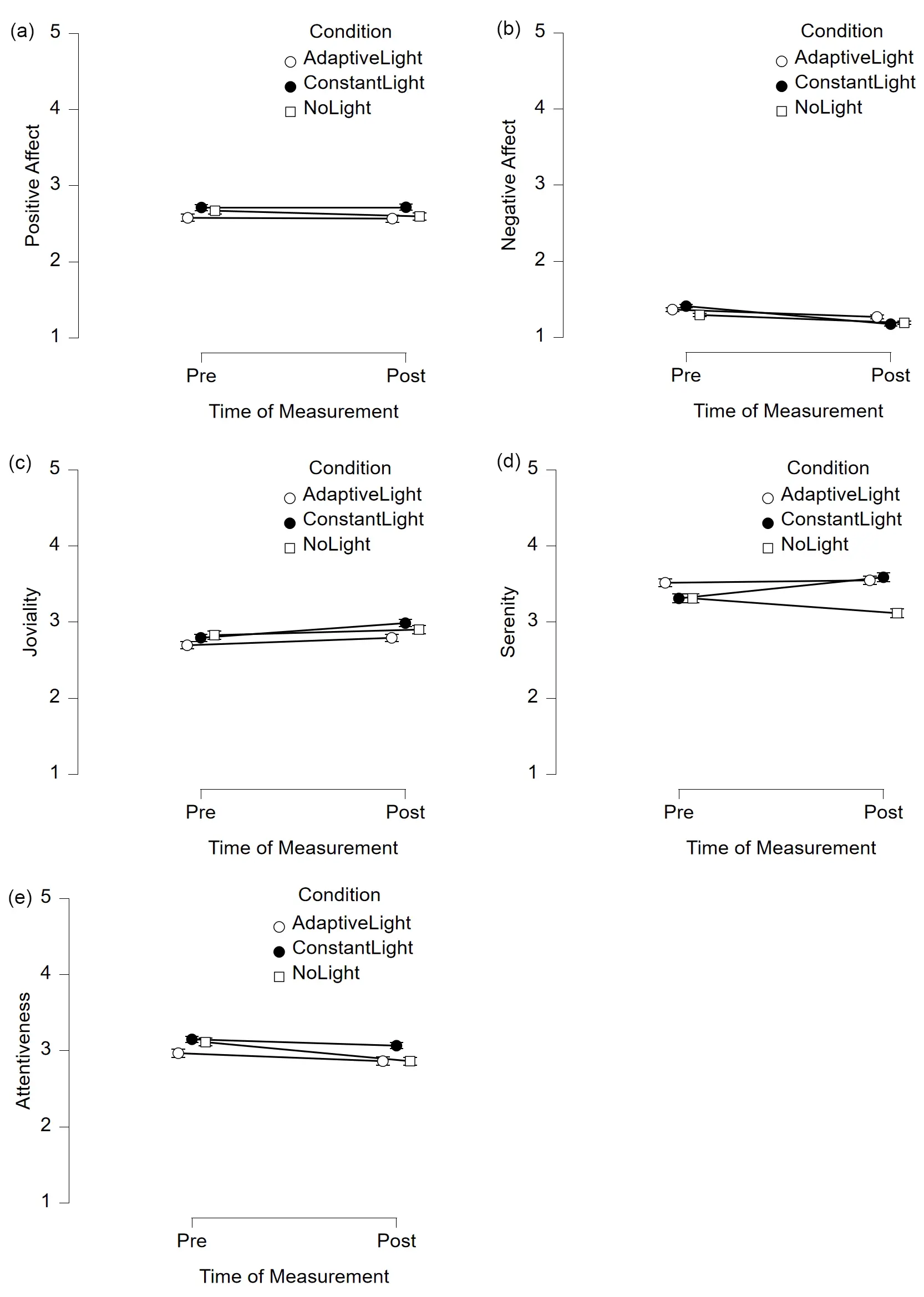

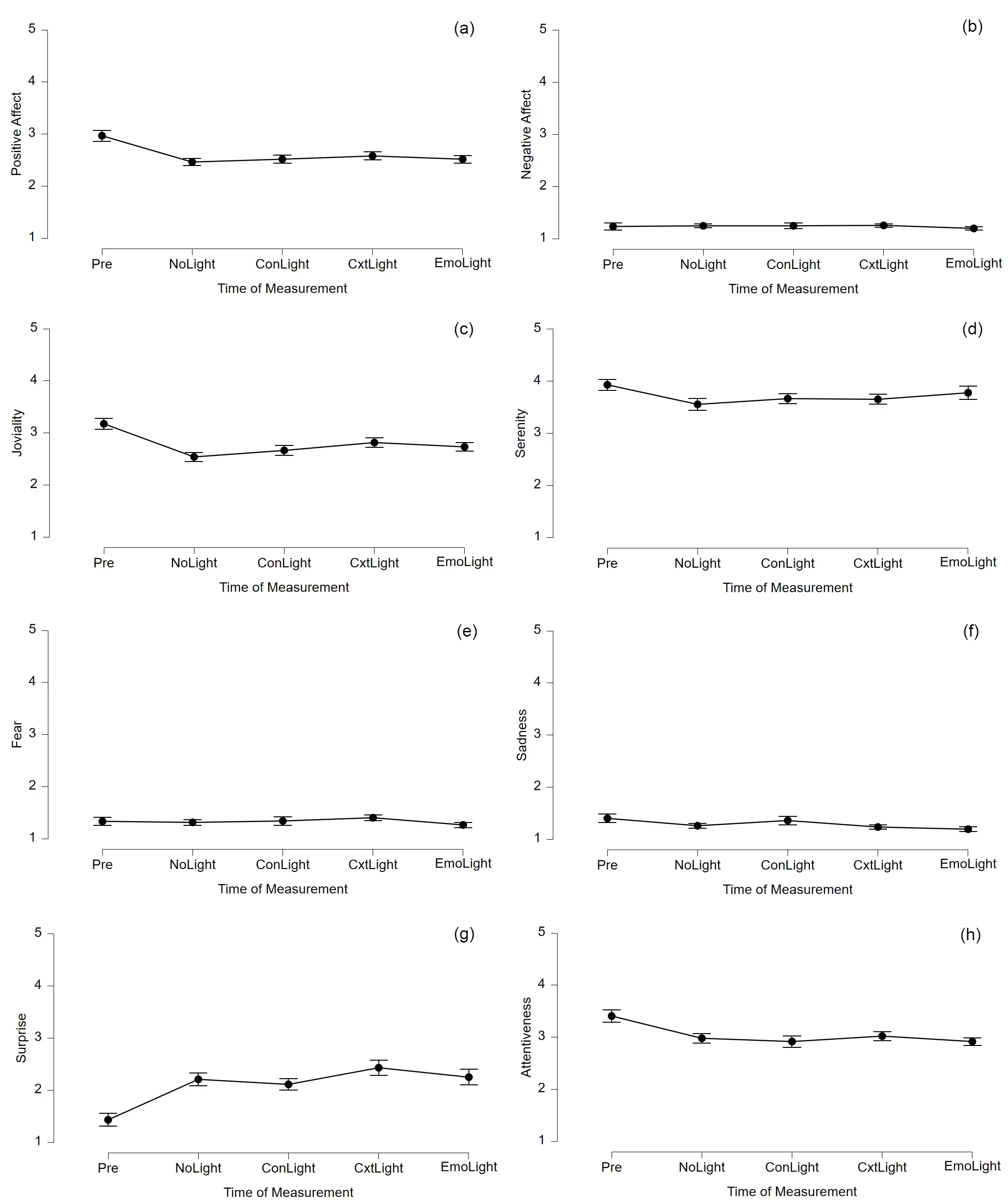

Regarding emotions, a mixed ANOVA indicated no significant main effect of lighting condition (F(2, 89) = 0.32, p = .726) or time of measurement (F(1, 89) = 0.37, p = .605) on Positive Affect. Also, no significant interaction effect was observed (F(2, 89) = 0.22, p = .806), and a planned contrast indicated no difference between the condition with adaptive lighting and the conditions with constant or no lighting (t(89) = -0.66, p = .509). For Negative Affect, no significant main effect of lighting condition was found (F(2, 89) = 0.42, p = .656), whereas a significant main effect of time was observed (F(1, 89) = 27.23, p < .001, ω² = .04), indicating a general decrease of Negative Affect after the storytelling. No significant interaction effect emerged (F(2, 89) = 2.64, p = .077), and a planned contrast indicated no difference between the condition with adaptive lighting and the conditions with constant or no lighting (t(89) = 0.69, p = .491). For Joviality, again, no significant main effect of lighting condition was found (F(2, 89) = 0.33, p = .717), but a significant main effect of time emerged (F(1, 89) = 4.38, p = .039, ω² = .01), with a general increase in Joviality after the storytelling. No significant interaction effect was indicated (F(2, 89) = 0.40, p = .673), and the planned contrast indicated no difference between the condition with adaptive lighting and the conditions with constant or no lighting (t(89) = -0.81, p = .421). For Serenity, no significant main effects of lighting condition (F(2, 89) = 1.57, p = .215) or time (F(1, 89) = 0.33, p = .569) were identified, but a significant interaction effect emerged (F(2, 89) = 4.12, p = .019). As displayed in Figure 2d, Serenity increased descriptively in the constant light condition, decreased in the no-light condition, and slightly increased in the adaptive light condition. However, Bonferroni-corrected post-hoc tests did not reveal any significant pairwise differences (ps > .05), and the planned contrast also showed no significant difference between the adaptive lighting condition and the other conditions (t(89) = 1.24, p = .220). For Attentiveness, no significant main effect of lighting condition was found (F(2, 89) = 0.58, p = .560), but again a significant main effect of time was identified (F(1, 89) = 6.56, p = .012). As displayed in Figure 2e, Attentiveness decreased descriptively in all lighting conditions, with the largest decrease occurring in the no-light condition. However, post-hoc pairwise comparisons revealed no significant differences (ps > .05). A planned contrast did not indicate a significant difference between the adaptive lighting condition and the conditions with constant or no lighting (t(89) = -0.86, p = .395).

Figure 2. Plots for pre- and post-measured emotions of Study I. (a) Descriptive plot for Positive Affect; (b) Descriptive plot for Negative Affect; (c) Descriptive plot for Joviality; (d) Descriptive plot for Serenity; (e) Descriptive plot for Attentiveness. AdaptiveLight: condition with adaptive lighting; ConstantLight: condition with constant lighting; NoLight: condition without additional lighting; Error bars represent the standard error.

Comparing robot perception, no significant group differences were found for Anthropomorphism (χ²(2) = 0.21, p = .899), Animacy (F(2, 89) = 0.08, p = .927), Likeability (F(2, 89) = 0.20, p = .195), Perceived Intelligence (χ²(2) = 0.16, p = .925), or Perceived Safety (χ²(2) = 3.63, p = .163). Planned contrasts revealed no significant differences between the adaptive lighting condition and the conditions with constant or no lighting for Anthropomorphism (t(89) = -0.20, p = .843), Animacy (t(89) = -0.34, p = .736), Likeability (t(89) = -1.70, p = .092), Perceived Intelligence (t(89) = -0.02, p = .983), or Perceived Safety (t(89) = 0.55, p = .584).

4.4 Discussion

We conducted a laboratory study to examine the combination of the well-researched biomimetic modality of body language together with the rather unexplored non-biomimetic modality of colored light in a robotic storytelling scenario. We used the Pepper robot, which employed emotion-conveying body language to tell a romantic story in three versions, (1) with emotion-driven colored lights, (2) with constant white lighting, (3) without additional illumination.

Concerning storytelling experience, we found no differences between the lighting conditions in terms of transportation or cognitive absorption; therefore, H1a and H1b must be rejected. While our findings for transportation are in line with results reported by Steinhaeusser and Lugrin[19], who used the NAO robot’s eye LEDs as an additional colored modality, we were able to mitigate the negative effect they found for cognitive absorption. In addition, the values of transportation and cognitive absorption in the adaptive lighting condition were apparently higher than for their colored LED group. Thus, we conclude that even though our implementation of colored light for robotic storytelling did not yet positively influence recipients’ perception of the storytelling experience, it did not confuse them, in contrast to colored eye LEDs.

Regarding emotions, we found no main effect of time on Positive Affect or Serenity over the storytelling, leading to the rejection of H2a and H2d. However, we did observe a significant decrease in Negative Affect as well as a significant increase in Joviality across all tested conditions. Thus, we can accept H2b and H2c, revealing a decrease of negative emotions and an increase of particular positive emotions due to robotic storytelling of romantic stories. Nevertheless, we cannot determine whether the positive emotions inherent in the romantic story were adopted by the recipients as is suggested for human oral storytelling[32], or whether robotic storytelling in general has a positive effect on recipients’ emotions regardless of the story genre. Future research utilizing different genres should be carried out to investigate both possibilities: the role of story genre on changes in specific emotions, and the overall positive effects of robotic storytelling on emotions compared to traditional human storytelling.

Concerning the effect of lighting conditions on altered emotions, we identified a relationship between changes in emotion from pre- to post-measurement and lighting condition for Serenity, but not for the other examined emotions (RQ1). Serenity tended to increase in the conditions with colored and constant light, but tended to decrease in the condition without any additional lighting. Serenity is often referred to as a state of inner peace[84] that is closely connected to harmony[85]. As harmony is the final state achieved at the end of our romantic story, the additional lighting might have reinforced this feeling in the recipients. However, since we did not find a difference between the constant and colored lighting, this effect might be explained by an increase in attention toward the robotic storyteller triggered by the presence of light, independent of its color. Our results reveal that although attentiveness decreased in all three conditions (RQ2), the decrease was descriptively strongest in the condition without any additional light, which is in line with this explanation. Thus, it is possible that even minimal additional lighting placed near the robot can enhance the storytelling experience.

Finally, we found no influence of lighting conditions on robot perception (RQ3). Comparing this result with the findings of Steinhaeusser and Lugrin[19], they reported a negative effect of applying colored light in the robot’s eye LEDs on Animacy. This negative effect disappeared when expanding the illuminated surface, reinforcing the suggestion that room illumination is better suited as a color and light modality for robotic storytelling than usage of eye LEDs. However, while Steinhaeusser et al.[20] revealed a positive effect of colored light on robot perception in terms of perceived competence, we were not able to indicate a positive influence on robot perception in terms of Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety. While this finding is surprising given the apparent similarity of competence and perceived intelligence at first glance, Scheunemann et al.[86] also reported a lack of correspondence between the two variables. It seems that, as storytelling is a social activity, the exploration of more socially oriented variables is more suitable. Future work should thus take further facets of robot perception into account to focus on the social aspects of the robotic storyteller. Similarly, the Anthropomorphism scale of the Godspeed questionnaire was already criticized for not covering subtle changes[87] and being correlated to its other scales[88], therefore other scales assessing anthropomorphism should be taken into account. Furthermore, our restrictive annotation process might have influenced our results. As fewer tokens were annotated with emotion labels and the majority of them were labeled as neutral and thus matched to white light only, less colored light was integrated into the storytelling compared to previous work by Steinhaeusser et al.[20]. It can therefore be suggested that our conservative consensus-based labeling approach may not have been suitable for this use case. 
Future iterations might rely on a lower number of annotators but consider all of their decisions.

In summary, while we found no significant differences between the lighting conditions on storytelling experience and robot perception, we observed a trend towards increased serenity in the colored and constant light conditions but a decrease in the condition without additional light. This suggests that emotional content from the story may have been transferred to recipients more effectively in the illuminated conditions, potentially due to enhanced focus induced by the lighting. Moreover, we observed a general decrease of negative emotions and an increase of specific positive emotions over the storytelling in all conditions, suggesting a general positive effect of robotic storytelling on emotions, which is in line with related studies[76,89] and highlights the potential of this field. However, regarding the integration of emotion-inducing colored light, applying guidelines from virtual environment research alone appears insufficient for robotic storytelling. Therefore, future work should empirically determine appropriate light colors that enhance and complement the emotional bodily expressions of a robotic storyteller, emphasizing the expressive potential of colored light.

5. Study II: Determining Light Colors for Emotion Expression

As integrating emotion-inducing colored light based on guidelines developed for virtual environments proved insufficient for improving robotic storytelling, we focused on reinforcing the expressiveness of the colored light in multimodal storytelling, facilitating the emotion recognition of the robotic storyteller. Therefore, we conducted an online study to empirically derive light colors supporting emotional expressions of our robotic storyteller Pepper. While the video-based approach is not uncommon in related studies[55,90-92], the advantages of robots’ physical embodiment particularly unfold in live, in-person interaction[93-96]. In line with this, participants tend to prefer in-person studies over video-based studies with robots[97]. However, video trials have been shown to be representative of in-person studies[97-100].

We based our approach on works by Song and Yamada[53] and Steinhaeusser et al.[89,91]. Working with a Roomba vacuum cleaner robot, Song and Yamada first derived emotional expressions separately via motion and light color. In a subsequent study both modalities were combined to compare the initial recognition rates of the unimodal expressions with the ones from the combined multimodal expressions, revealing that while some emotions were better recognized from the multimodal expression, some were better expressed using only motion. We adapted this approach by combining previously established emotional bodily expressions for the Pepper robot[89] with light colors derived from the literature and comparing their recognition rates with those from the original study.

H3: Adding colored light facilitates emotion recognition from robotic expressions.

5.1 Materials

To acquire comparable recognition rates, we followed the empirical online approach of Steinhaeusser et al. using pictures of the robot’s emotional expressions illuminated by colored lights[89,91].

5.1.1 Concept for emotion-driven light colors

In general, warm, i.e., more reddish, colors are suggested to be more stimulating[101,102] and are therefore also associated with more active emotions of higher arousal[103], whereas cool colors seem to be more calming and associated with passive moods[102], while at the same time attracting more attention than warm colors[101]. These effects have already been transferred to new media such as mixed reality, as Betella et al.[61] reported warm colors inducing more arousal than cold colors when used in an interactive colored floor. The associations between colors and emotions seem to be consistent across different media[104]. Thus, findings on emotion-color associations from presentation modes other than light were also taken into account when conceptualizing our stimulus materials, as they might transfer to light in supporting emotion recognition within robotic storytelling. Therefore, we combined findings from color theory, related study results, and findings from other media to conceptualize our color combinations for the online study. All color combinations derived from the literature are displayed in Table 4.

| Emotion Label | Version | Spotlight | Ambient Light | Associations |
|---|---|---|---|---|
| Vigilance | 1 | white | pale blue | White: cleanliness and honesty[110]; Blue: comfortable for vision[109] |
| | 2 | white | pale orange | Orange: upbeat[113], energetic[102,103], vitality[101], vigilance[74] |
| | 3 | pale blue | pale yellow | Yellow: attention grabbing caution sign[102,111], awareness[112], focusing, feeling awake[109] |
| Admiration | 1 | pale green | warm yellow | Yellow: cheerful, celestial[102]; Green: health[115], trust[110], admiration[74] |
| | 2 | warm orange | warm yellow | Orange: fanciness and beauty[101] |
| | 3 | pink | warm yellow | Pink: love, temptation[101], and romance[114] |
| Amazement | 1 | pale blue | white | Blue: amazement[74]; White: excitement[103] |
| | 2 | pale purple-blue | pale blue | Purple: magical and spiritual[116] |
| | 3 | warm yellow | white | Yellow: inspiring[102], surprise[14] |
| Ecstasy | 1 | warm orange | bright yellow | Yellow: sun[111], joy[17,67], jolliness[101], and happiness[14,54,108,109] |
| | 2 | pale yellow | bright yellow | Orange: high arousal and happiness[103], hilarity and exuberance[102] |
| | 3 | green | bright yellow | Green: health[115], refreshing[102] |
| Loathing | 1 | green-yellow | violet | Green & Yellow: jealousy[101,118,119], envy[117,120], toxicity[115], disease[102], ghastliness[102] |
| | 2 | orange | green-yellow | Orange: distress, being upset[123] |
| | 3 | pink | orange | Violet: hatred[122]; Pink: controversial[124] |
| Terror | 1 | purple | blue | Red: danger[101,102], signal to stop[126] |
| | 2 | blue | red | Blue: powerlessness[106] |
| | 3 | red | purple | Purple: death[116], insecurity[101], loneliness and desperation[102] |
| Rage | 1 | red | dark blue | Red: rage[102], anger[14,107], flushing with aggression[54], hostility[15] |
| | 2 | red | red | Blue: powerlessness[106], coldness[106] |
| | 3 | red | white | White: emptiness and loneliness[108] |
| Grief | 1 | white | gray | Blue: coldness[106], powerlessness[106], gloom[102], sadness[14,103,107], and sorrow[101] |
| | 2 | pale blue | gray | Gray: sadness[14] |
| | 3 | dark blue | dark blue | White: emptiness and loneliness[108] |

One of the most popular color-emotion associations is the one between blue and sadness-related subemotions, which is also utilized in Disney’s Pixar movie Inside Out[105] for the figure Sadness. Blue is the coldest color of the spectrum[106] and is referred to as the “quintessential color for powerlessness”[106]. It gives the impression of gloom[102] and is associated with low arousal[103] as found in the associated subemotions of sadness[103] and sorrow[101]. Regarding its effects, blue tends to induce inertia[106], and in the form of light, it can reduce pleasantness[67]. In studies with human facial expressions, sad faces were associated with blueish[14,107] but also grayish colors[14]. In the context of robots, blue was also reported to be appropriate for depicting sadness[13,17] and blue-purple for grief[16]. Therefore, we created three versions of light combinations for grief integrating blue, gray, and white light, the latter due to its association with emptiness and loneliness[108], which are connected to grief.

However, blue is a manifold color, and “the slightest change in that color, therefore, can completely alter how you respond to it”[106]. While dark blue often gives negative impressions, blue is also associated with the ocean and the sky, which can have comforting effects[108]. Pale blue was also shown to be comfortable for vision[109], supporting attention. We combined two of the versions with white, as it is associated with cleanliness and honesty[110]. In contrast to pale blue, yellow is an attention-grabbing color, used as a caution sign not only by humans in industrial societies but also in nature[102,111]. Moreover, yellow is associated with awareness[112]. For vigilance, we tested both blue and yellow as well as their combination due to these attention-related effects. However, to make the yellow aesthetically more pleasing, we turned it into a pale yellow[111], a color that was already reported to benefit learning performance, aid task focus, and promote alertness[109]. Lastly, as orange has been described as upbeat[113] and energetic[102,103], is associated with vitality[101], and depicts vigilance in Plutchik’s Wheel of Emotions[74], we also implemented a version with orange light.

Orange is also associated with fanciness and beauty[101], thus we also used it for depicting admiration. We combined it with yellow, which has been reported to give cheerful and celestial impressions[102] and was previously successfully used for a robot to display admiration. For another version, yellow was also combined with pink which is psychologically associated with love, temptation[101], and romance[114]. Lastly, we combined yellow with green, as it signals health[115], can be associated with trust[110], and is used for admiration in Plutchik’s Wheel of Emotions[74].

For amazement, we again used the respective color from Plutchik’s Wheel of Emotions—blue. We combined it with white, which is associated with excitement[103], as well as purple which is referred to as magical and spiritual[116]. Lastly, we combined white with inspiring yellow[102], which has been reported to be associated with surprised faces[14] and to increase the intensity of surprise in robots’ faces[54].

Yellow is furthermore identified with the Sun[111] and therefore evolutionarily associated with joy[17,67], jolliness[101], and happiness[14,54,108]. Yellow-colored environments even increase our heartbeat[109]. Thus, it is not surprising that Disney used yellow for the character Joy in the Pixar movie Inside Out[105]. We also used yellow for our implementation of the most intense subemotion of joy, namely ecstasy, in our social robot, as suggested by Terada et al.[16]. Again, the color’s alarming effect can be moderated by desaturation[111], as pale yellow is still associated with happiness[109], leading to our first combination. Next, orange is also associated with high arousal emotions such as happiness[103], but also with hilarity and exuberance[102], providing the base for our second color combination for ecstasy. Lastly, we combined yellow with green, which signals health[115] and gives a refreshing impression[102]. All color combinations were balanced in their tone[67].

However, yellow is also associated with negative traits such as jealousy[101] and envy in some cultures[117]. More popular is the connection between green and jealousy or related emotions[118,119], due to the proverbial “green-eyed monster” or “green with envy”[120]. Green also signals poison or toxicity, for instance in spoiled meat[115], and disease[102], a connection again utilized in Disney’s Pixar movie Inside Out[105] for the character design of Disgust as well as for the people-hating Grinch[121]. Also, human faces depicting disgust were shown to be associated with both yellow and green[14]. Moreover, green is connected to ghastliness[102]. Given these associations, we used a green-yellow tone for the most intense sub-emotion of disgust, namely loathing. We combined it with violet, as it is reported to be associated with hatred[122] and is used within Plutchik’s Wheel of Emotions for illustrating loathing. In a second version, we combined it with orange, which is associated with distress and being upset[123]. In our last version, we combined orange with pink, as it is one of the most controversial colors[124], evoking strong feelings[125], which might reinforce the depicted emotion.

For terror, we created two light color combinations using red, as it is directly connected to danger[101,102], signaling us to stop because of dangerous circumstances[126]. This connection can even be visualized as the human heartbeat increases in red environments[109,126]. As the combination with unbalanced light temperatures creates imbalance and tension[67], we combined red with blue, which forms the other end of the light spectrum[102] and is associated with powerlessness[106]. Moreover, we combined red with purple, which is associated with death, especially in movies[116], and gives the impression of insecurity[101], loneliness and desperation[102]. Furthermore, purple was already used to display terror in a robot[16].

For rage, again red is a prominent color[102], that is associated with anger across diverse cultures[14,107]. This can easily be explained by the human face getting red when in anger and aggression[54]. But red is not only a sign of anger and related feelings, it also induces it[126]. Also in HRI, several studies reported that adding the color red to a robot’s expression helps to recognize the intended emotion of anger[13,17] or rage[16], increases the perceived intensity of the depicted emotion[54], and shows hostility[15]. Therefore, we used red light for all tested variations of colored light combinations. For diversity, we also combined it with white and blue light given the above-described associations with negative states. For the neutral expression, only one version of colored light was created. We used white light, as the color was already used for expressing neutrality within a robot[107]. Furthermore, the lack of light was viewed unfavorably in our initial study, so we proceeded with white lighting to maintain consistent brightness.

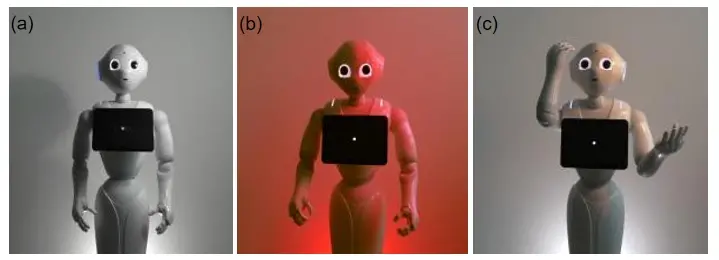

5.1.2 Implementation

We created three versions of each of the eight original emotional expressions[89] for the eight inner subemotions in Plutchik’s model, using the different light colors determined in Section 5.1.1. We used the same lighting setup as in Study I (one spotlight and one ambient light), the original pose library by Steinhaeusser et al.[89] within Choregraphe 2.5.10.7[127], and two Tapo E27 bulbs controlled with the Tapo-Link smartphone application. Following this approach, 25 pictures of the robot (three for each emotion and one for “neutral”) executing the original bodily expressions illuminated by the colored lights were taken with a constant camera setup and background. All 25 pictures can be found in our online repository (https://dx.doi.org/10.58160/sr1wkzg92vewvry4) as well as in Appendix B. Representative pictures are displayed in Figure 3.

Figure 3. Exemplary pictures of stimulus material from Study II. (a) Picture with neutral expression and white ambient and spotlight; (b) Picture with rage expression and red ambient and spotlight; (c) Picture with amazement expression and white ambient and yellow spotlight.
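
The stimulus set described in this section can be summarized as a small lookup table. The sketch below is only an illustration of how the color combinations from Table 4 and the neutral condition might be encoded; the names `LIGHT_COMBINATIONS` and `stimulus_list` are hypothetical, and the entries are color names rather than the actual bulb settings, which are not reported here.

```python
# (spotlight, ambient light) per emotion and version, transcribed from Table 4.
# The neutral expression uses white light for both sources.
LIGHT_COMBINATIONS = {
    "vigilance": [("white", "pale blue"), ("white", "pale orange"),
                  ("pale blue", "pale yellow")],
    "admiration": [("pale green", "warm yellow"), ("warm orange", "warm yellow"),
                   ("pink", "warm yellow")],
    "amazement": [("pale blue", "white"), ("pale purple-blue", "pale blue"),
                  ("warm yellow", "white")],
    "ecstasy": [("warm orange", "bright yellow"), ("pale yellow", "bright yellow"),
                ("green", "bright yellow")],
    "loathing": [("green-yellow", "violet"), ("orange", "green-yellow"),
                 ("pink", "orange")],
    "terror": [("purple", "blue"), ("blue", "red"), ("red", "purple")],
    "rage": [("red", "dark blue"), ("red", "red"), ("red", "white")],
    "grief": [("white", "gray"), ("pale blue", "gray"),
              ("dark blue", "dark blue")],
    "neutral": [("white", "white")],
}


def stimulus_list():
    """Flatten the table into (emotion, version, spotlight, ambient) tuples."""
    return [
        (emotion, version, spotlight, ambient)
        for emotion, combos in LIGHT_COMBINATIONS.items()
        for version, (spotlight, ambient) in enumerate(combos, start=1)
    ]


stimuli = stimulus_list()
print(len(stimuli))  # 25 pictures: 8 emotions x 3 versions + 1 neutral
```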

5.2 Methods

In an online survey, participants were asked to assign one emotion label to each of the pictures showing bodily expressions combined with colored light for validation.

5.2.1 Measures and procedure

Upon entering the webpage hosted using LimeSurvey[128], participants first gave informed consent. Within the survey, one picture of a combined expression was presented at a time without any contextual information, along with a list of the eight inner Plutchik emotions and two other options: a “neutral” label and the answer “I don’t know”. Participants were asked to choose what the depicted expression might represent. After they chose the emotion label they thought fit best, they proceeded to the next picture. At the end of the survey, participants were asked to provide demographic data.

5.2.2 Participants

In total, 101 persons participated in the survey. Twenty participants self-reported as male (age: M = 23.45, SD = 2.19), eighty as female (age: M = 20.85, SD = 1.62) and one participant identified as diverse (age: 21). The overall mean age was 21.37 years (SD = 2.01).

5.3 Results

All analyses were carried out using Microsoft Excel 2016 and JASP[83] (version 0.16), with a significance level of .05. Recognition rates achieved in Study II are presented in Table 1, including rates with all labels (column 3) and rates excluding the “I don’t know” option (column 4), in comparison to the original study, which used the bodily expressions without light (column 5). In general, multinomial tests showed that label assignment frequencies differed significantly across all 25 pictures, ps < .001. In addition, we statistically compared the assignment frequency of the intended emotion versus other emotion labels across the three color combinations for each target emotion using χ² tests.
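
To illustrate the two computations described above, the following sketch derives a recognition rate with and without the “I don’t know” answers and runs a Pearson χ² test on a 3 × 2 table (three color versions, intended label versus any other label). All counts are hypothetical and the function names are assumptions; whether the reported tests included or excluded “I don’t know” answers is not stated, so the example does not take a position on that.

```python
import math


def recognition_rate(n_intended, n_total, n_dont_know=0, exclude_dont_know=False):
    """Share of answers that match the intended emotion label."""
    denominator = n_total - n_dont_know if exclude_dont_know else n_total
    return n_intended / denominator


def chi_square_independence(table):
    """Pearson chi-square statistic and df for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df


# Hypothetical answers of 101 participants for one picture
print(recognition_rate(40, 101))
print(recognition_rate(40, 101, n_dont_know=21, exclude_dont_know=True))

# Hypothetical counts per version: [intended label, any other label]
counts = [[40, 61], [55, 46], [50, 51]]
chi2, df = chi_square_independence(counts)
p = math.exp(-chi2 / 2)  # closed form, valid only because df == 2
print(round(chi2, 2), df, round(p, 3))
```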

For vigilance, none of the color combinations was most frequently assigned to the respective label. For version 1, most participants chose the label “neutral” (n = 22), whereas “amazement” (n = 20) was chosen most frequently for version 2 and “terror” (n = 22) for version 3. We found no significant relationship between recognition rates and color combination, χ²(2) = 1.97, p = .374. Similarly, all versions of the admiration expression scored higher on other emotion labels than on “admiration”. Version 1 was most frequently associated with “vigilance” (n = 30), version 2 with “amazement” (n = 28), and version 3 again with “vigilance” (n = 29). Again, no significant relationship between recognition rates and color combination was obtained, χ²(2) = 1.97, p = .373. Lastly, none of the color combinations created for loathing was most frequently assigned to the respective label; all versions scored highest on “terror” (version 1: n = 56; version 2: n = 57; version 3: n = 57). No significant association between recognition rates and color combination was found, χ²(2) = 0.86, p = .650.

For amazement, all color combinations were most frequently assigned to the intended label, with version 3 exceeding the original recognition rate of the unimodal bodily expression when excluding the “I don’t know” label from the calculation. However, our test results indicated no significant relationship between recognition rates and color combination, χ2(2) = 3.67, p = .186. For rage, all combinations were likewise most frequently recognized as “rage”, with version 2 exceeding the original recognition rate of the unimodal expression; here, the relationship between recognition rate and color combination was significant, χ2(2) = 6.91, p = .032. For ecstasy, all color combinations were again assigned to the intended label most frequently, and the relationship between recognition rates and color combination was significant, χ2(2) = 6.05, p = .049. In contrast to amazement and rage, however, the recognition rates were lower than in the original study. The same pattern was found for grief, although the relationship between recognition rates and color combination did not reach significance, χ2(2) = 5.49, p = .064. Lastly, the neutral multimodal expression was most often assigned to the “neutral” label, exceeding the original unimodal recognition rate.

5.4 Discussion

We conducted an online survey to determine which light colors support the recognition of emotions expressed by our robotic storyteller. Our results show that only three of the examined expressions achieved improved recognition rates compared to the initial unimodal expressions[89]. This was the case for the two emotional expressions of amazement and rage as well as for the neutral expression, which does not convey any emotion. For vigilance, admiration, ecstasy, terror, and grief, the recognition rates declined when the light modality was added, with the largest drop for terror. For loathing, the added lighting even led participants to assign a completely different emotion. Therefore, H3 can be partly accepted.

The significant difference in recognition rates among the three versions of rage reflects that the recognition rate increased relative to the unimodal expression only in versions two and three, in which red light was added to the expression. This finding is in line with related work: Song and Yamada[53] as well as Löffler et al.[17] already revealed the high impact of colored lighting for this emotion. This might be explained by the human bodily reaction of facial flushing when enraged[17,54]. Our extension of the color to the room’s illumination might have further resembled the visual alarm signal associated with red[126], which is even more visually intense[126] than when the light is only attached to the robot. In contrast, no significant differences were found between recognition rates for the three versions of the sub-emotion amazement, the most intense level of the basic emotion surprise[24,74]. However, slightly improved recognition rates were observed when the expression was depicted with a yellowish spotlight and white ambient light, an association in line with findings for colored framing of human facial expressions[14], although such a color cue has no direct analogue in human communication. This finding is all the more interesting because rage, or the related basic emotion of anger, is easily recognized from a robot’s bodily expressions[57,91,129], whereas the recognition of amazement seems to be rather difficult[91]. Also, Terada et al. reported that when using only the light modality, amazement often “might be recognized as surprise, joy, or expectation”[16]. Although our recognition rates showed only minor improvements after excluding uncertain answers from the dataset, this type of confusion did not occur in our study, strengthening the idea of combining bodily expressions with colored light.

In a related study, Löffler et al.[17] also reported that joy, fear, and sadness benefit from the color modality, which was not the case for the related emotions investigated in our survey. In addition, although there was a significant relationship between recognition rate and color combination for ecstasy, suggesting a better suitability of the green/yellow combination over the others, the recognition rate was lower than for the unimodal expression. One possible explanation might be the intensity of the emotions in question, which was higher for the sub-emotions used in our study. Most surprising given the literature is the decrease of the recognition rate for grief when adding colored light. Related works all agree that blue is closely connected to sadness and sorrow[14,16,17,101], and blue light has already been shown to be sufficient for emotion expression with robots[13,53]. In our case, the dark blue light did not improve emotion recognition. However, in addition to the higher intensity inherent to our emotion label, the recognition rate of the original unimodal expression for grief was already so high that it was hard to surpass.

Lastly, the notable increase in recognition for the neutral expression combined with white light aligns well with our expectations. Participants seemed to interpret white as the default illumination, probably because the modality of light cannot be omitted when the robot must remain visible. Similar to other modalities such as body language, light may be considered a modality that “cannot not communicate”[130]. It seems to convey a message, even if it is only improving emotion recognition for single states.

Given these results, we recommend the use of colored lighting to support emotion recognition from robotic bodily expressions for the categories of amazement, rage, and neutral. The exact color combinations and HSB values can be retrieved from Table 5.

| Emotion | Spotlight Hue | Spotlight Saturation | Ambient Light Hue | Ambient Light Saturation |
| --- | --- | --- | --- | --- |
| Amazement | 47 | 90 | 0 | 0 |
| Rage | 2 | 100 | 2 | 100 |
| Neutral | 0 | 0 | 0 | 0 |
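As a rough illustration of how the hue and saturation values in the table above could be turned into RGB values for an LED or smart lamp, the sketch below uses Python's standard `colorsys` module. Several points are assumptions rather than details given in this section: hue is interpreted in degrees (0 to 360), saturation in percent, and brightness is fixed at 100 % because the table does not list it. The names `RECOMMENDED` and `hsb_to_rgb` are hypothetical.

```python
import colorsys

# (hue, saturation) pairs from the table above: (spotlight, ambient light).
# Assumed units: hue in degrees (0-360), saturation in percent.
RECOMMENDED = {
    "amazement": ((47, 90), (0, 0)),
    "rage": ((2, 100), (2, 100)),
    "neutral": ((0, 0), (0, 0)),
}

def hsb_to_rgb(hue_deg, sat_pct, bri_pct=100):
    """Convert HSB to 8-bit RGB; brightness defaults to 100 % (an assumption)."""
    r, g, b = colorsys.hsv_to_rgb(hue_deg / 360, sat_pct / 100, bri_pct / 100)
    return tuple(round(channel * 255) for channel in (r, g, b))

for emotion, (spot, ambient) in RECOMMENDED.items():
    print(emotion, "spotlight:", hsb_to_rgb(*spot), "ambient:", hsb_to_rgb(*ambient))
```

Under these assumptions, a saturation of 0 yields plain white light regardless of hue, which matches the white ambient light described for amazement and the white lighting of the neutral expression.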

6. Study III: Evaluating Colored Lights in Robotic Storytelling

While related studies show positive effects of integrating colored light in robotic storytelling[20], the results from our first user study in this paper were relatively inconclusive, showing only a positive effect on individual emotional states. Within both the former work by Steinhaeusser et al.[20] and our own first user study, decisions on colors associated with story emotions were based on guidelines for designing emotion-inducing virtual environments. Nevertheless, given our results, this approach does not appear to be effective with the Pepper robot when using emotional body language. Therefore, we revised our color-emotion associations within an empirical online study. As the light-supported multimodal expressions had so far only been examined in isolation in the online setting, we conducted a follow-up laboratory user study to examine them in the context of robotic storytelling.

It might be the case that not the color-emotion associations themselves but the entire concept of emotion-driven lighting is unsuitable for improving robotic storytelling. While studies show that robotic storytellers should display emotions[11,49] and that colored lights can improve a robot’s expressiveness[13,17], context-based room illumination might improve the storytelling experience more effectively than emotion-driven lighting. For audiobook reception, studies show that visual anchors depicting story context, such as persons, actions, or environments added by augmented reality, can improve not only recall but also narrative engagement without influencing users’ cognitive load[131]. While colored light cannot depict persons or actions, it can represent environments, for example by illustrating the greenery of a forest or the blueness of the ocean[103]. Therefore, we compared our emotion-based approach for integrating colored light into robotic storytelling to a context-based approach that uses colored light to illustrate story environments. As control conditions, we again used a constant white light as well as a version without any additional lighting. In total, we implemented four conditions: (1) emotion-based lighting, (2) context-based lighting, (3) constant lighting, and (4) no additional lighting. To allow recipients to express their liking for the different approaches in comparison, we used a within-subjects design in a laboratory in-person study. All sub-studies were deemed ethically sound by the local ethics committee.

Based on our previous studies and related work we postulate the following hypotheses:

• H4: Adding emotion-based or context-based colored lighting will enhance storytelling experience compared to constant or no additional lighting.

• H5: Adding emotion-based or context-based colored lighting will increase emotion induction compared to constant or no additional lighting.

• H6: Adding emotion-based or context-based colored lighting will improve robot perception compared to constant or no additional lighting.

Moreover, we were interested in the differences between the emotion-based and context-based approach:

• RQ4: Does context-based colored lighting outperform emotion-driven colored lighting?

• RQ5: Which presentation style is preferred by recipients?

6.1 Materials

This time, we utilized stories from the fantasy genre, as the importance of the environmental setting is higher in this genre[132], and thus fantasy stories typically include more details on the places and environments in which the story unfolds.

6.1.1 Concept

Due to its widespread popularity[133] and the positive remarks reported in related studies[89], we selected the Harry Potter universe as the narrative framework for our study. We used short stories from the Wizarding World’s website (formerly Pottermore) written by J.K. Rowling. These stories provide descriptions of the lesser-known wizarding schools.

Story Selection. Since we used a within-participants design, four different stories were assigned to the four conditions. We conducted an online survey to ensure that the four stories did not differ in story liking, storytelling experience, or emotion induction. To ensure we had four comparable stories, we tested five stories, keeping one as a backup. All five stories, Uagadou[134], Beauxbatons[135], Castelobruxo[136], Durmstrang[137], and Mahoutokoro[138], were approximately equal in length.

The survey followed a within-subjects design. Upon accessing the survey, participants provided informed consent. They then read the first short story presented as plain text. Afterwards, they answered an attention check question about a story detail, completed the previously used questionnaires on Transportation[79] and emotions[80] (i.e., Positive and Negative Affect), rated their liking for the story (“I liked the story.”) on a five-point Likert scale (1: “strongly disagree” and 5: “strongly agree”), and stated whether they had known the story before. This process was repeated for each story, with the order of stories randomized. Lastly, participants provided demographic data and were thanked for their participation.

Fifteen participants with a mean age of 21.93 years (SD = 2.28) took part in the study. Four participants self-identified as male (age: M = 23.00, SD = 1.83), while ten participants self-identified as female (age: M = 21.60, SD = 2.50). One participant identified as diverse gender (age: 21).

A Bayesian RM-ANOVA was conducted for Transportation; the Bayes factor indicated that the data best support the null model (BF10 = 1.00). The model including the main effect of story was less likely (BF10 = 0.15), suggesting evidence for H0. The same pattern was found for Negative Affect (BF10 = 0.21 for the model including the effect of story), Positive Affect (BF10 = 0.08), and story liking (BF10 = 0.09). Thus, we conclude that the data do not support the existence of differences between the stories. Descriptive data are displayed in Table 6.

| Measure | Beauxbatons M | Beauxbatons SD | Castelobruxo M | Castelobruxo SD | Durmstrang M | Durmstrang SD | Mahoutokoro M | Mahoutokoro SD | Uagadou M | Uagadou SD |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Transportation (a) | 4.27 | 1.32 | 4.11 | 1.11 | 4.61 | 1.35 | 4.19 | 1.33 | 4.21 | 1.54 |
| Positive Affect (b) | 2.42 | 1.03 | 2.37 | 0.76 | 2.36 | 0.99 | 2.49 | 0.73 | 2.36 | 0.77 |
| Negative Affect (b) | 1.09 | 0.17 | 1.14 | 0.29 | 1.18 | 0.26 | 1.08 | 0.11 | 1.13 | 0.33 |
| Story Liking (b) | 3.47 | 1.30 | 3.53 | 1.06 | 3.73 | 1.10 | 3.67 | 1.05 | 3.47 | 1.13 |

(a): calculated values from 1 to 7; (b): calculated values from 1 to 5.
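The Bayes factors reported above are stated as BF10, that is, evidence for the model including the story effect relative to the null model. Taking the reciprocal gives BF01, which expresses how much more likely the data are under H0. The sketch below does this for the four reported values. The verbal labels follow commonly used conventional thresholds (3, 10, 30, 100), which are an assumption here and are not stated in this section; the function names are illustrative.

```python
def bf01(bf10):
    """Evidence for H0 over H1, given the reported evidence for H1 over H0."""
    return 1.0 / bf10

def evidence_label(bf):
    """Conventional verbal label for a Bayes factor >= 1 (assumed thresholds)."""
    if bf < 1:
        raise ValueError("pass the Bayes factor in the direction that is >= 1")
    for upper, label in ((3, "anecdotal"), (10, "moderate"),
                         (30, "strong"), (100, "very strong")):
        if bf < upper:
            return label
    return "extreme"

# BF10 values for the model including the effect of story, as reported in the text.
reported_bf10 = {
    "Transportation": 0.15,
    "Negative Affect": 0.21,
    "Positive Affect": 0.08,
    "Story liking": 0.09,
}

for measure, value in reported_bf10.items():
    inverse = bf01(value)
    print(f"{measure}: BF01 = {inverse:.2f} ({evidence_label(inverse)} evidence for H0)")
```

Read this way, a BF10 of 0.15 means the data are roughly 6.7 times more likely under the null model than under the model with a story effect.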

We discarded the short story about the Durmstrang Institute due to its more negative tone compared to the others. Thus, we used the stories about Uagadou, Beauxbatons, Castelobruxo, and Mahoutokoro within our study.

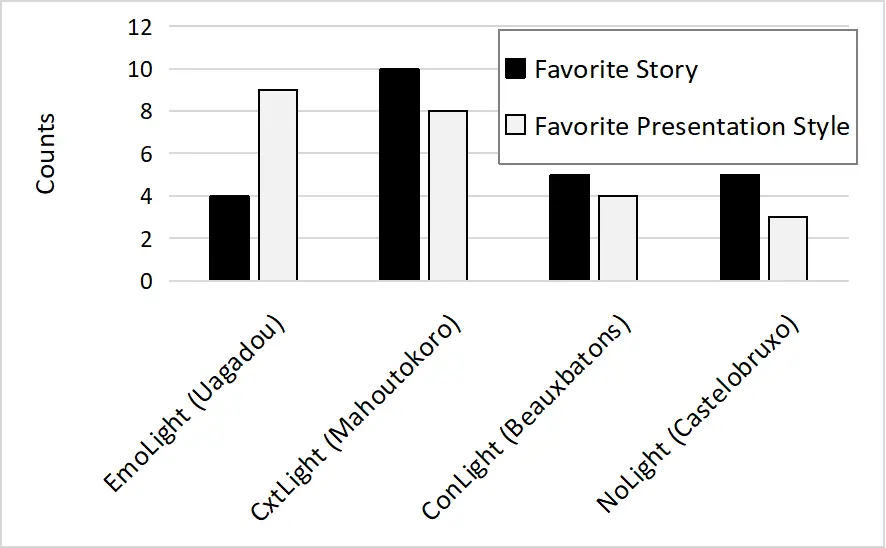

Emotional Annotation. To annotate the four short stories with emotions, we followed the manual annotation approach by Steinhaeusser et al.[89,91]. We used the same tokenization method and emotion labels as in Study I (see section 4.1.1). Thirteen annotators with a mean age of 22.15 years (SD = 2.15) were recruited. Eleven annotators self-identified as female (age: M = 21.64, SD = 1.86), while two annotators self-identified as male (age: M = 25.00, SD = 1.41). No annotators identified as diverse gender.